I was rather bummed out the first time I noticed this oddity, and by my second drive I decided to figure out what was happening. I'll spare you the trip to Wikipedia if you haven't already looked this up yourself.

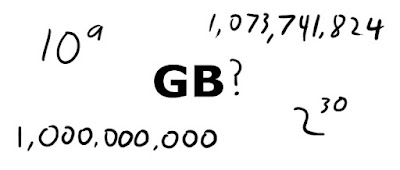

Turns out there are two ways of defining a Gigabyte: either, as the name would seem to suggest, as 1 billion bytes (1,000,000,000), or as 1,073,741,824 bytes (2^30, or 1024^3).

Gigabyte

What?

The first definition makes sense. Giga = 10^9 or a billion, so a Gigabyte would be a billion bytes. Where does this other number come from?

Well, a byte is made up of 8 bits, and each bit is either a 1 or a 0 (binary). So computers start out dealing with things in 2s (1 or 0), and by extension, powers of 2: 16, 32, 64, 128, 256, 512, 1024... wait, we just saw this number. Someone somewhere realized that 1024 was fairly close to 1,000, so why not label the Kilobyte (literally "one thousand bytes") as equal to 1024 bytes. Apply the same trick twice more and you get a Megabyte of 1024^2 bytes and a Gigabyte of 1024^3 bytes. Makes sense. Well, to geeks, and people like me who have grown up with geeky numbers like that.
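If you want to see how "fairly close" drifts as the prefixes get bigger, here is a minimal Python sketch. The function name `prefix_gap` and the list of prefixes are my own choices for illustration, not anything official.

```python
# Compare decimal prefixes (powers of 1000) with binary ones (powers of 1024).
PREFIXES = ["Kilo", "Mega", "Giga", "Tera"]


def prefix_gap(power):
    """Return (decimal_bytes, binary_bytes, percent_gap) for a prefix.

    power=1 is Kilo, power=2 is Mega, power=3 is Giga, and so on.
    """
    decimal = 1000 ** power
    binary = 1024 ** power
    gap = (binary - decimal) / decimal * 100
    return decimal, binary, gap


for power, name in enumerate(PREFIXES, start=1):
    decimal, binary, gap = prefix_gap(power)
    print(f"{name}byte: {decimal:,} vs {binary:,} ({gap:.1f}% apart)")
```

The gap starts at 2.4% for the Kilobyte and grows with every step up, reaching roughly 7.4% by the time you get to the Gigabyte.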

But hard drive manufacturers didn't care that computers deal in powers of 2, and so built drives based on precise numbers of bytes. So, every Gigabyte on your hard drive's box is one billion bytes. But your computer thinks of a GB as 2^30 bytes. If you take your calculator and divide 10^9 by 2^30, you will end up with 0.93132257. Multiply that by the number of Gigabytes the box says, and you'll find out how many Gigs your computer will think it is.
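Here is that calculator math as a short Python sketch, in case you'd rather not punch it in by hand. The name `reported_gigabytes` and the sample drive sizes are just ones I picked for the example, and it assumes your computer counts a Gigabyte as 2^30 bytes.

```python
GB_ON_THE_BOX = 10 ** 9      # what the manufacturer means by 1 GB
GB_ON_THE_COMPUTER = 2 ** 30  # what the computer means by 1 GB (assumed)


def reported_gigabytes(advertised_gb):
    """Convert the size printed on the box to the size the computer shows."""
    total_bytes = advertised_gb * GB_ON_THE_BOX
    return total_bytes / GB_ON_THE_COMPUTER


# Sample drive sizes, chosen only for illustration.
for size in (80, 250, 500):
    print(f"{size} GB on the box shows up as {reported_gigabytes(size):.2f} GB")
```

A drive sold as 500 GB, for example, comes out to about 465.66 GB this way.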

I was playing with these numbers earlier today for a math video, so they're on my mind.

~Luke Holzmann

Your Media Production Mentor

1 comment :

Seems like the good people of XKCD have a comic about this too: Kilobyte.

Enjoy.

~Luke
